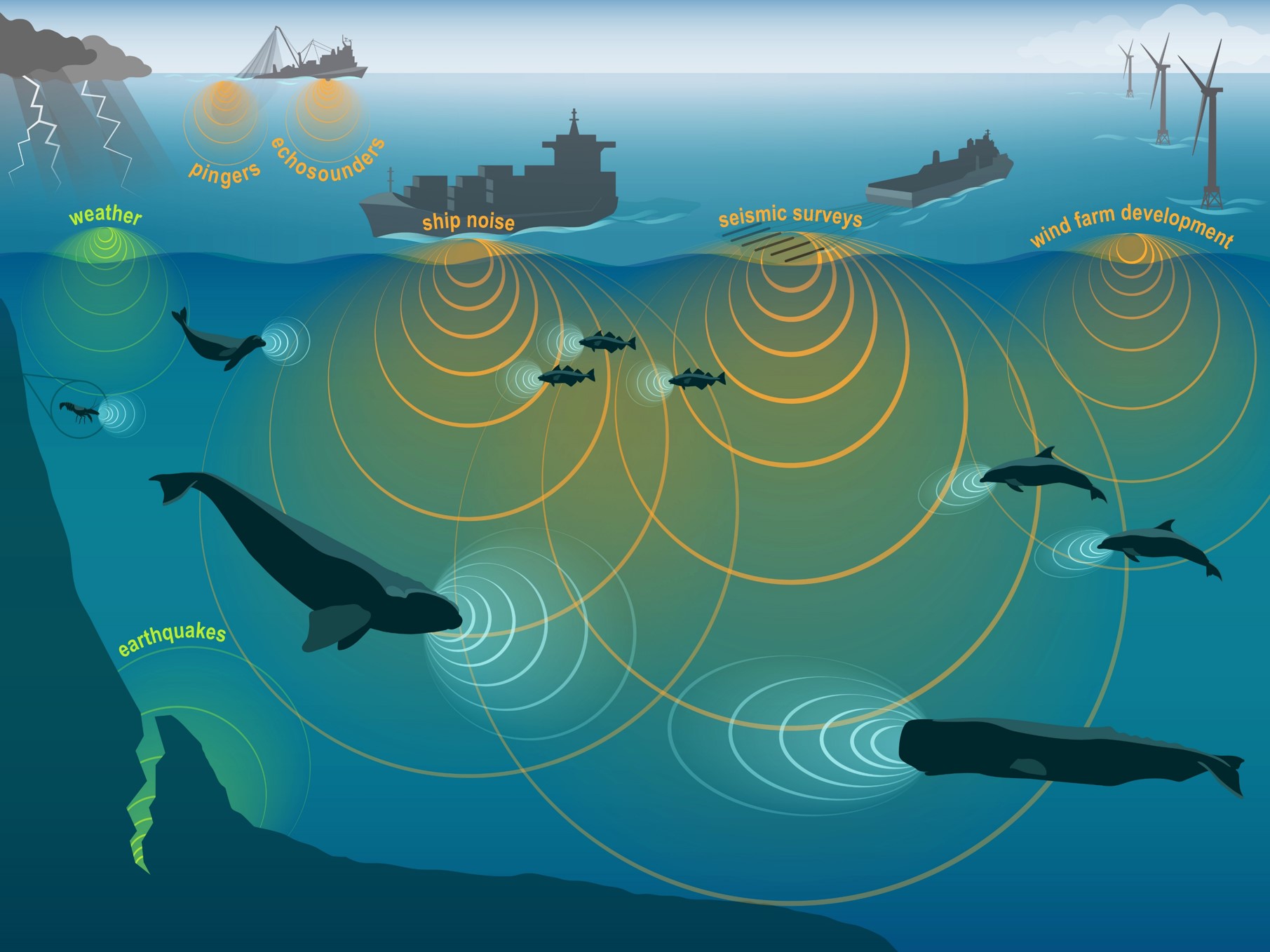

For about thirty years, passive acoustic monitoring (PAM) has been a widely used observation method to detect, identify and sometimes localize cetaceans. The method is non-invasive, avoids the bias introduced by human presence, and allows recordings over very long periods (from several days to several years). In addition, recent advances in machine learning applied to acoustic signals now make it possible to consider applying a range of complex methods to process these very large amounts of data automatically.

However, the scientific community has identified problems that still limit what these analyses can achieve:

In this context, we seek to understand and account for these difficulties in order to develop automatic methods suited for use by biologists, ecologists and behavioural scientists studying cetaceans.

Keywords: Underwater Acoustics, Signal Processing, Deep Learning for Audio, Big Data, Passive Acoustic Monitoring, Marine Bioacoustics, Citizen Science, Manual Annotation

Credit: NOAA Fisheries

G. Dubus, M. Torterotot, J. Béesau, P. Nguyen Hong Duc, D. Cazau, O. Adam (2023, June). Better quantifying inter-annotator variability: A step towards citizen science in underwater passive acoustics. In OCEANS 2023-Limerick (pp. 1-8). IEEE. doi:10.1109/OCEANSLimerick52467.2023.10244502

Deployments of underwater passive acoustic recorders have been widely used to study marine biodiversity, especially to detect vocal cetaceans. To process the huge amount of data collected, automatic detection and classification methods are necessary. The recent development of such methods, which includes training and then testing models, relies mainly on so-called ground-truth labels obtained by manual annotation of audio files.

However, manual annotation is a difficult and time-consuming process because of the large size of the datasets, the wide diversity of sounds, their unfamiliar representation, the variable quality of the acoustic recordings and the variability of human judgment. These factors induce non-negligible differences from one annotator to another, and better quantifying and understanding such differences is essential to make progress in machine learning applications.

On this topic, inter-annotator variability is investigated in three multi-annotator annotation campaigns performed on different marine bioacoustics datasets. Each campaign gathered more than 10 annotators with different profiles, from novices to field experts, and covered different annotation tasks, geographical areas and sound classes. Using these multiple annotations, this work improves the understanding of inter-annotator variability through kappa metrics. In a second part, grouping annotations by majority vote is shown to drastically reduce the potential errors in annotations from novice annotators. This last observation highlights the possibility of using citizen science to overcome the lack of annotations while maintaining the annotation quality expected from an expert.
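As an illustration of what a kappa metric measures, here is a minimal sketch of Cohen's kappa between two annotators. The abstract does not say which kappa statistics were used, so the choice of Cohen's kappa, the function name `cohen_kappa` and the example labels are all assumptions made for this sketch.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa between two annotators labelling the same segments."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of segments")
    n = len(labels_a)
    # Observed agreement: fraction of segments with identical labels.
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement from each annotator's marginal label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_chance = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both annotators used one identical label everywhere
    return (p_observed - p_chance) / (1.0 - p_chance)


# Hypothetical presence (1) / absence (0) labels on eight spectrogram segments.
expert = [1, 1, 0, 0, 1, 0, 0, 0]
novice = [1, 0, 0, 0, 1, 1, 0, 0]
kappa = cohen_kappa(expert, novice)
print(f"kappa = {kappa:.3f}")
```

Here the two annotators agree on 6 of 8 segments (75 %), but because absence dominates both label sets, a large share of that agreement is expected by chance, and kappa is correspondingly lower than the raw agreement rate.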

G. Dubus, M. Torterotot, A. Gros-Martial, P. Nguyen Hong Duc, D. Cazau, O. Adam, From citizen science to AI models: Advancing cetacean vocalization automatic detection through multi-annotator campaigns, Ecological Informatics, Volume 81, 2024, 102642, ISSN 1574-9541, doi:10.1016/j.ecoinf.2024.102642.

Continuous underwater Passive Acoustic Monitoring (PAM) has emerged as a strong tool for cetacean research. To handle the vast volume of collected data, it is essential to employ automated detection and classification methods. Recent deep learning approaches, which involve training and testing models, require a large amount of labeled data. These labels are obtained through manual annotation of audio files, which often relies on human experts.

This study is based on an annotation campaign focusing on blue whale calls in the Indian Ocean, involving 19 novice annotators and an expert in bioacoustics. It explores the integration of novice annotators in marine bioacoustics research through citizen science programs, which could drastically increase the size of labeled datasets and enhance the performance of detection and classification models. The analysis reveals distinctive annotation profiles influenced by the complexity of vocalizations and the annotators' strategies, ranging from conservative to permissive.

To address the challenges of annotation discrepancies, Convolutional Neural Networks (CNNs) are trained on annotations from both novices and the expert. The results show variations in model performance. Our work highlights the importance of annotation guidelines encouraging a more conservative approach to improve overall annotation quality.

In an effort to optimize the potential of multi-annotation and mitigate the presence of noisy labels, two annotation aggregation methods (majority voting and soft labeling) are proposed and tested. The results demonstrate that both methods, particularly when a sufficient number of annotators is involved, significantly improve model performance and reduce variability: the standard deviation of the areas under the PR and ROC curves falls below 0.02 for both vocalizations with 13 aggregated annotators, whereas it was 0.17 and 0.21 for the blue whale D-calls and 0.05 and 0.04 for the SEIO PBW vocalizations when annotators were considered separately. Moreover, these aggregation methods enable models trained on non-expert annotations to match the performance of models trained on expert annotations. These findings suggest that crowdsourced annotations from novice annotators can be a viable alternative to expert annotations.
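The two aggregation schemes can be sketched as follows for binary presence/absence labels. This is only an illustration under stated assumptions: the function names, the example annotations, the 0.5 threshold and the tie-handling rule (ties count as absence) are hypothetical and not taken from the paper.

```python
def soft_labels(annotations):
    """Per-segment fraction of annotators who marked the call as present."""
    n_annotators = len(annotations)
    # zip(*annotations) iterates over segments (columns) across annotators.
    return [sum(votes) / n_annotators for votes in zip(*annotations)]


def majority_vote(annotations, threshold=0.5):
    """Hard label 1 when more than `threshold` of annotators marked presence."""
    # Strict inequality: a tie is resolved as absence (assumed, conservative).
    return [int(p > threshold) for p in soft_labels(annotations)]


# Hypothetical binary annotations: one row per annotator, one column per segment.
annotations = [
    [1, 0, 0, 1, 0],
    [1, 0, 1, 1, 0],
    [1, 1, 0, 0, 0],
]
soft = soft_labels(annotations)    # training targets in [0, 1]
hard = majority_vote(annotations)  # training targets in {0, 1}
print(soft)
print(hard)
```

Majority voting discards the level of disagreement once the label is decided, whereas soft labels keep it as a target between 0 and 1 that a model can be trained on directly, for instance with a binary cross-entropy loss.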

Paper in preparation

Automatic detection of cetacean vocalizations from passive acoustic monitoring (PAM) datasets using machine learning remains a challenging task. The large diversity of sound types and variability of surrounding soundscapes and marine environments figure among the numerous complexity factors. The scarcity of vocal activity of many species within the long-term time series recordable with PAM adds the difficulty of addressing imbalanced datasets. Furthermore, the complexity of manual annotation results in incomplete and/or noisy labels, which further complicate the classification/detection task. While open-source annotated datasets have already been released in the DCLDE community, classical methods based on supervised learning are still limited, especially in their capability of generalization.

Recently, contrastive learning methods have shown strong performance in automatic detection and classification of images and sounds, and can outperform classical supervised learning (i.e. the classical CNNs + FCL), notably on imbalanced datasets, which are common in PAM. These methods focus on training models to acquire valuable representations, emphasizing interpretability and latent features. The objective is to learn an embedding space where similar data samples lie close together while dissimilar ones are pushed apart. In a second step, the detection/classification task is performed from this embedding space.
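To make the embedding objective concrete, here is a minimal pure-Python sketch of a supervised contrastive loss in the commonly used SupCon form. It is an assumption that this formulation matches the one used in the study; the function name, the temperature value and the toy 2-D embeddings are hypothetical. Embeddings are assumed to be non-zero vectors.

```python
import math


def supcon_loss(embeddings, labels, temperature=0.1):
    """Supervised contrastive loss averaged over anchors that have a positive."""
    # L2-normalise so that dot products are cosine similarities.
    normed = []
    for vec in embeddings:
        norm = math.sqrt(sum(x * x for x in vec))
        normed.append([x / norm for x in vec])

    n = len(normed)
    total, n_anchors = 0.0, 0
    for i in range(n):
        # Temperature-scaled similarity of anchor i to every other sample.
        sims = {
            j: sum(a * b for a, b in zip(normed[i], normed[j])) / temperature
            for j in range(n) if j != i
        }
        positives = [j for j in sims if labels[j] == labels[i]]
        if not positives:
            continue  # an anchor without a same-class partner contributes nothing
        # Log-sum-exp over all non-anchor samples, stabilised by the max.
        max_sim = max(sims.values())
        log_denom = max_sim + math.log(sum(math.exp(s - max_sim) for s in sims.values()))
        # Mean negative log-probability of picking a same-class sample.
        total += -sum(sims[j] - log_denom for j in positives) / len(positives)
        n_anchors += 1
    return total / n_anchors if n_anchors else 0.0


# Toy example: the same four embeddings, with labels that either match
# the geometric clusters or cut across them.
labels = ["call", "call", "noise", "noise"]
clustered = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
mixed = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]
print(supcon_loss(clustered, labels), supcon_loss(mixed, labels))
```

The loss is low when samples sharing a label are already close in the embedding space and high when same-class samples are far apart, which is the behaviour the training procedure exploits before a separate detector or classifier is fitted on the embeddings.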

In this study, we explore the potential of such methods in underwater PAM, applied to low-frequency vocalizations of Antarctic fin whales and blue whales, within the largest public labeled acoustic dataset dedicated to those species (Miller et al., 2021). Supervised contrastive learning is compared with the methods most commonly used in the DCLDE community. The evaluation uses validation sets from different geographical areas, drawn from several underwater PAM datasets (with examples based on DCLDE datasets from previous editions), to assess the generalization capacity of such methods.

The general objective of this work is to bring one of the newest methods for automatic detection and classification to the attention of the PAM community.

Paper under review - Dubus, G., Torterotot, M., Beesau, J., Cazau, D., Dupont, M., Gros-Martial, A., Loire, B., Michel, M., Morin, E., Nguyen Hong Duc, P., Raumer, PY., Adam, O., Samaran, F., APLOSE: a scalable web-based annotation tool for marine bioacoustics.

Emerging detection and classification algorithms based on deep learning models require manageable large-scale manual annotations of ground truth data. To date, the challenge of creating large and accurate annotated datasets of underwater sounds has been a major obstacle to the development of robust recognition algorithms. APLOSE (Annotation PLatform for Ocean Sound Explorers) is an open-source, web-based yet scalable tool which facilitates collaborative annotation campaigns in underwater acoustics. The platform was used to carry out research projects on inter-annotator variability, to build training and testing data sets for detection algorithms and to perform bioacoustics analysis on noisy datasets. In the future, it will enable the creation of high-quality reference datasets to test and train the new detection and classification algorithms.

The emerging Bioacousticians' Days (Journées des jeunes bioacousticiens - JJBA) is a conference on the subject of bioacoustics, the study of the ethology and biology of living beings through sound. This year, the event is organized by PhD candidates and postdocs at UBO/IUEM, ENSTA Bretagne, LAM/Institut Jean le Rond d'Alembert, University of Zurich and France Energies Marine.

Our primary mission is to build and strengthen this community of young researchers. We achieve this by giving them the opportunity to showcase their work, participate in enriching workshops, and attend plenary sessions led by renowned researchers. However, our event is not limited to the academic aspect. We believe in the importance of learning in a playful environment, which is why we choose to accommodate all participants at the same location as the conference.

What sets our initiative apart is our commitment to welcoming all young researchers who wish to participate with very low registration fees (20€). We believe in equal opportunities, accessibility to knowledge, and the creation of a space where curious minds can thrive without heavy financial constraints.

Around seventy acousticians from around the world (19 countries across 4 continents) came together for 3 days:

More information on our website: https://jjbioacoustique.wixsite.com/jjba/en

Sponsors: